
This blog post is your guide to mastering MLOps, a critical skill set in today’s AI-driven world. Work through 13 hands-on labs and real-world projects designed to give you practical experience in building, deploying, and managing robust machine learning pipelines.

Gain hands-on experience with industry-leading tools like MLFlow, DVC, GitHub Actions, and Docker. Learn how to automate model training, streamline data versioning, and implement CI/CD pipelines, while leveraging cloud platforms like AWS SageMaker and Azure ML for scalable deployments. Whether you’re an aspiring Machine Learning Engineer, Data Scientist, or DevOps Specialist, these comprehensive resources will empower you to enhance your skill set, boost your career prospects, and thrive in the competitive tech landscape.

Contents:

1. Hands-On Labs for MLOps

- Lab 1: Versioning and Tracking Models with MLFlow

- Lab 2: Implementing Data Versioning Using DVC

- Lab 3: Building a Shared Repository with DagsHub and MLFlow

- Lab 4: Building and Automating ML Models Using Auto-ML Tools

- Lab 5: Monitoring Model Explainability and Data Drift with SHAP and Evidently

- Lab 6: Creating and Deploying Containerized ML Applications

- Lab 7: Developing and Deploying APIs for ML Models

- Lab 8: Building and Deploying ML-Powered Web Applications

- Lab 9: Deploying Automated ML Services Using BentoML

- Lab 10: Implementing CI/CD Pipelines with GitHub Actions for MLOps

- Lab 11: Tracking Model Performance and Data Drift Using Evidently AI

- Lab 12: Ensuring Data and Model Integrity with Deepchecks

- Lab 13: Building an End-to-End MLOps Pipeline on AWS

2. Real-World Projects

3. FAQs

1. Hands-On Labs for MLOps

Lab 1: Versioning and Tracking Models with MLFlow

Objective: Learn how to version, register, and track machine learning models using MLFlow.

In this lab, you will explore the functionality of MLFlow for model lifecycle management. You’ll learn to log parameters, metrics, and artifacts for reproducible experiments. By the end of this lab, you will be able to effectively version and manage ML models with MLFlow.
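To make the vocabulary concrete, here is a stdlib-only toy (not MLflow's API) that models what a tracking server stores: each run keeps its parameters, metric history, and artifacts, and registering a model under a name creates a new version number that points back to the run that produced it. In MLflow itself, the corresponding calls are `mlflow.start_run()`, `mlflow.log_param()`, `mlflow.log_metric()`, `mlflow.log_artifact()`, and `mlflow.register_model()`. The class and method names below are hypothetical.

```python
import uuid
from dataclasses import dataclass, field


@dataclass
class Run:
    """One experiment run: inputs (params), results (metrics) and files (artifacts)."""
    run_id: str
    params: dict = field(default_factory=dict)
    metrics: dict = field(default_factory=dict)    # metric name -> list of (step, value)
    artifacts: dict = field(default_factory=dict)  # artifact name -> content


class ToyTracker:
    """Hypothetical in-memory stand-in for a tracking server plus a model registry."""

    def __init__(self):
        self.runs = {}
        self.registry = {}  # model name -> run_ids; version N is registry[name][N - 1]

    def start_run(self):
        run = Run(run_id=uuid.uuid4().hex)
        self.runs[run.run_id] = run
        return run

    def log_param(self, run, key, value):
        run.params[key] = value

    def log_metric(self, run, key, value, step=0):
        # Keep the full history so training curves can be compared across runs.
        run.metrics.setdefault(key, []).append((step, value))

    def log_artifact(self, run, name, content):
        run.artifacts[name] = content

    def register_model(self, name, run):
        """Attach a new, monotonically increasing version of `name` to a run."""
        versions = self.registry.setdefault(name, [])
        versions.append(run.run_id)
        return len(versions)
```

Because every registered version points back to a run, you can always trace a deployed model to the exact parameters and metrics that produced it, which is the reproducibility property this lab is about.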

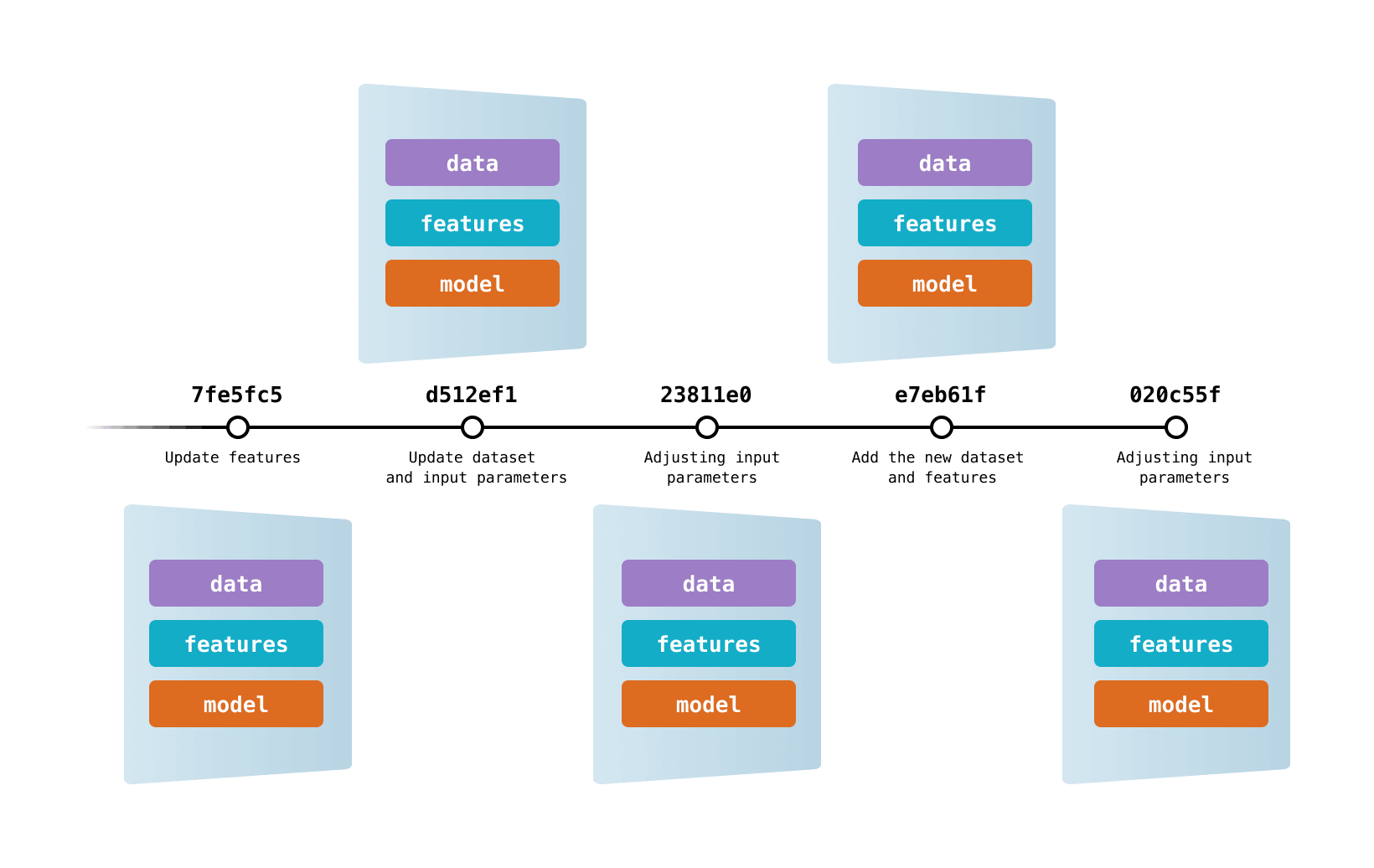

Lab 2: Implementing Data Versioning Using DVC

Objective: Understand data versioning and its importance in the MLOps pipeline using DVC.

In this lab, you’ll learn how to capture data versions, integrate DVC with Git, and use cloud storage for maintaining data and model artifacts. By the end of this lab, you will be confident in version-controlling datasets and models in collaborative environments.
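The core idea behind DVC can be sketched in a few lines: hash the data file, store its content in a cache keyed by that hash, and commit only a small pointer file to Git. The sketch below is a simplification under that assumption; the `.ptr.json` pointer format and the function names are hypothetical. The real workflow uses commands such as `dvc add`, `dvc remote add`, and `dvc push`, with `.dvc` files acting as the pointers.

```python
import hashlib
import json
import shutil
from pathlib import Path


def md5_of(path: Path) -> str:
    """Hash a file in 1 MiB chunks so large datasets do not need to fit in memory."""
    h = hashlib.md5()
    with path.open("rb") as f:
        for chunk in iter(lambda: f.read(1 << 20), b""):
            h.update(chunk)
    return h.hexdigest()


def _cache_path(cache_dir: Path, digest: str) -> Path:
    # Two-character prefix directories keep any single folder from growing huge.
    return cache_dir / digest[:2] / digest[2:]


def add_to_cache(data_file: Path, cache_dir: Path) -> Path:
    """Copy data_file into a content-addressed cache and write a pointer file.

    The pointer (hypothetical '<name>.ptr.json' format) is what you commit to Git."""
    digest = md5_of(data_file)
    cached = _cache_path(cache_dir, digest)
    if not cached.exists():  # identical content is stored only once
        cached.parent.mkdir(parents=True, exist_ok=True)
        shutil.copy2(data_file, cached)
    pointer = data_file.with_name(data_file.name + ".ptr.json")
    pointer.write_text(json.dumps({"path": data_file.name, "md5": digest}))
    return pointer


def checkout(pointer: Path, cache_dir: Path) -> Path:
    """Restore the exact file version recorded in a pointer file."""
    meta = json.loads(pointer.read_text())
    target = pointer.parent / meta["path"]
    shutil.copy2(_cache_path(cache_dir, meta["md5"]), target)
    return target
```

Checking out an older commit restores an older pointer file, and `checkout` then brings back the matching data; pushing the cache to cloud storage is what makes those versions available to teammates.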

Lab 3: Building a Shared Repository with DagsHub and MLFlow

Objective: Learn to create a shared repository for ML models and datasets to enable collaborative workflows.

In this lab, you’ll integrate DagsHub, DVC, Git, and MLFlow to set up a shared environment. This will help you manage and share model versions and data effectively. By the end of this lab, you will understand the collaborative aspect of MLOps repositories.
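One common way to share experiments is to point MLflow at the tracking server that DagsHub hosts for each repository. MLflow reads the `MLFLOW_TRACKING_URI`, `MLFLOW_TRACKING_USERNAME`, and `MLFLOW_TRACKING_PASSWORD` environment variables; the sketch below assumes DagsHub's `https://dagshub.com/<owner>/<repo>.mlflow` URI pattern and a DagsHub access token as the password. The owner, repository, username, and token values are placeholders.

```python
import os


def dagshub_mlflow_env(owner: str, repo: str, username: str, token: str) -> dict:
    """Environment variables that send MLflow logging to a DagsHub-hosted server.

    All arguments are placeholders; load real tokens from a secret store, never Git."""
    return {
        "MLFLOW_TRACKING_URI": f"https://dagshub.com/{owner}/{repo}.mlflow",
        "MLFLOW_TRACKING_USERNAME": username,
        "MLFLOW_TRACKING_PASSWORD": token,
    }


def configure(owner: str, repo: str, username: str, token: str) -> None:
    # Run this before any mlflow calls in the process so they target the shared server.
    os.environ.update(dagshub_mlflow_env(owner, repo, username, token))
```

Because everyone on the team logs to the same URI, runs and registered model versions from different machines appear side by side in one place.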

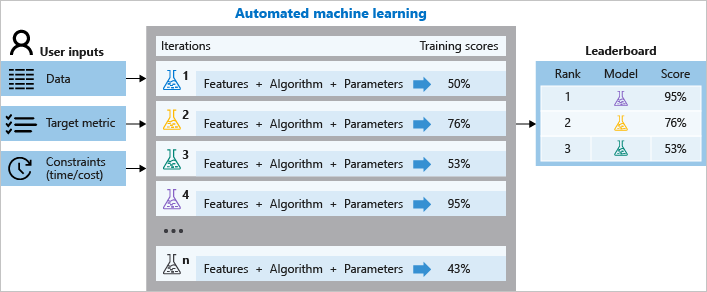

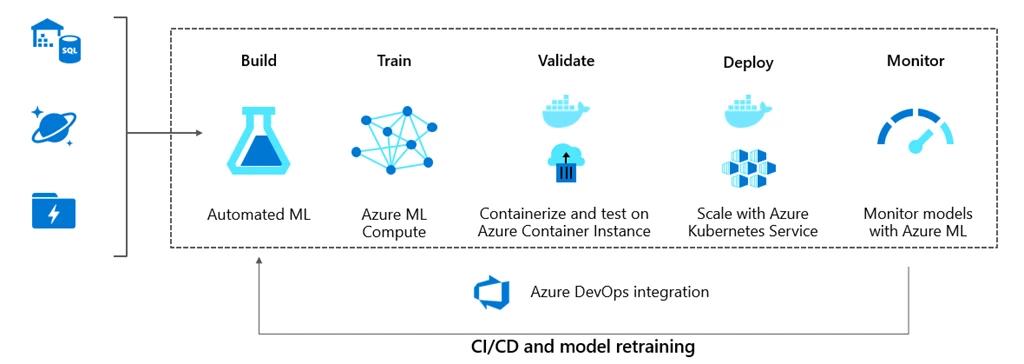

Lab 4: Building and Automating ML Models Using Auto-ML Tools

Objective: Automate the end-to-end machine learning workflow—from data preparation to model deployment—using popular Auto-ML tools.

This lab helps you understand and apply Auto-ML tools to automate repetitive tasks in the ML pipeline. You will explore tools like PyCaret and Azure AutoML to streamline various stages of model development and deployment.
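At its core, an Auto-ML tool automates model selection: it trains several candidate models, scores each with cross-validation, and keeps the best. The stdlib-only sketch below shows that loop on a one-feature regression problem; the candidate set and the interleaved 5-fold split are assumptions chosen for brevity. In PyCaret, `setup()` followed by `compare_models()` runs a much larger version of this comparison.

```python
import math
import statistics


def fit_mean(xs, ys):
    """Baseline candidate: always predict the training mean."""
    m = statistics.fmean(ys)
    return lambda x: m


def fit_line(xs, ys):
    """Ordinary least squares for y = a + b*x (assumes xs are not all equal)."""
    mx, my = statistics.fmean(xs), statistics.fmean(ys)
    b = sum((x - mx) * (y - my) for x, y in zip(xs, ys)) / sum((x - mx) ** 2 for x in xs)
    a = my - b * mx
    return lambda x: a + b * x


def make_knn(k):
    """k-nearest-neighbours regression; k is the hyperparameter being searched."""
    def fit(xs, ys):
        pairs = list(zip(xs, ys))
        def predict(x):
            nearest = sorted(pairs, key=lambda p: abs(p[0] - x))[:k]
            return statistics.fmean(y for _, y in nearest)
        return predict
    return fit


CANDIDATES = {"mean": fit_mean, "linear": fit_line, "knn_k1": make_knn(1), "knn_k3": make_knn(3)}


def cv_rmse(fit, xs, ys, folds=5):
    """Root-mean-squared error over interleaved k-fold cross-validation."""
    errors = []
    for i in range(folds):
        test_idx = set(range(i, len(xs), folds))
        tr_x = [x for j, x in enumerate(xs) if j not in test_idx]
        tr_y = [y for j, y in enumerate(ys) if j not in test_idx]
        model = fit(tr_x, tr_y)
        errors += [(model(xs[j]) - ys[j]) ** 2 for j in test_idx]
    return math.sqrt(statistics.fmean(errors))


def auto_select(xs, ys):
    """Score every candidate and return the best name plus the full leaderboard."""
    scores = {name: cv_rmse(fit, xs, ys) for name, fit in CANDIDATES.items()}
    return min(scores, key=scores.get), scores
```

The leaderboard returned alongside the winner is the same idea as the comparison table Auto-ML tools display, and logging it (for example to MLFlow) keeps the selection auditable.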

Lab 5: Monitoring Model Explainability and Data Drift with SHAP and Evidently

Objective: Explore tools like SHAP and Evidently to monitor model interpretability and detect data drift.

In this lab, you’ll analyze feature contributions using SHAP and learn how to track performance metrics and data quality with Evidently. By the end of this lab, you will understand the importance of explainability and auditability in production ML systems.
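SHAP builds on Shapley values: a feature's contribution is its weighted average marginal effect on the prediction over all subsets of the other features. For a handful of features the values can be computed exactly, as in the sketch below. It assumes that an "absent" feature takes a baseline value (one of several possible definitions), and its cost is exponential in the number of features; SHAP's explainers exist precisely to compute or approximate these values efficiently.

```python
from itertools import combinations
from math import factorial


def shapley_values(predict, x, baseline):
    """Exact Shapley values for one prediction.

    predict:  function taking a list of feature values
    x:        the instance to explain
    baseline: values used for features outside a coalition (an assumption)"""
    n = len(x)

    def value(coalition):
        z = [x[i] if i in coalition else baseline[i] for i in range(n)]
        return predict(z)

    phi = []
    for i in range(n):
        others = [j for j in range(n) if j != i]
        total = 0.0
        for size in range(n):
            # Shapley weight |S|! (n - |S| - 1)! / n! for coalitions S without i
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                s = set(subset)
                total += weight * (value(s | {i}) - value(s))
        phi.append(total)
    return phi
```

The values always add up to `predict(x) - predict(baseline)`, which is why SHAP plots can show each feature pushing a prediction up or down from a common starting point.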

Lab 6: Creating and Deploying Containerized ML Applications

Objective: Package and distribute machine learning applications using Docker containers.

In this lab, you’ll learn how to write Dockerfiles, build Docker images from them, and deploy containerized ML workflows. By the end of this lab, you will be able to efficiently package and deploy ML systems.
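A container for a model-serving app usually needs only a few Dockerfile instructions. The Python helper below writes a minimal Dockerfile; the base image tag, the port, and the file names (`requirements.txt`, `app.py`, `model.pkl`) are assumptions to adapt to your project.

```python
from pathlib import Path

# Hypothetical project layout: app.py serves predictions, model.pkl is the trained
# artifact, requirements.txt pins the Python dependencies.
DOCKERFILE_TEMPLATE = """\
FROM python:{python_version}-slim
WORKDIR /app
# Install dependencies first so this layer is reused when only the code changes
COPY requirements.txt .
RUN pip install --no-cache-dir -r requirements.txt
COPY app.py model.pkl ./
EXPOSE {port}
CMD ["python", "app.py"]
"""


def render_dockerfile(python_version="3.11", port=8000):
    return DOCKERFILE_TEMPLATE.format(python_version=python_version, port=port)


def write_dockerfile(project_dir, **kwargs):
    """Write the rendered Dockerfile into project_dir and return its path."""
    path = Path(project_dir) / "Dockerfile"
    path.write_text(render_dockerfile(**kwargs))
    return path
```

From the project directory, `docker build -t ml-app .` builds the image and `docker run -p 8000:8000 ml-app` starts it. Copying `requirements.txt` before the application code lets Docker cache the slow dependency-install layer across rebuilds.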

Continue Reading: Docker Tutorial for Beginners

Lab 7: Developing and Deploying APIs for ML Models

Objective: Build APIs for machine learning models using FastAPI/Flask and deploy them on Azure Cloud.

In this lab, you’ll create APIs to serve predictions from ML models and use Azure resources to deploy them in production. By the end of this lab, you will be proficient in API development and deployment on Azure.
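Before reaching for FastAPI or Flask, it helps to see what a prediction API boils down to: parse a JSON request, call the model, and return JSON with an appropriate status code. The sketch below does this as a plain WSGI application using only the standard library, as a stand-in for a framework (Flask apps are WSGI apps too). The linear "model", its weights, and the `/predict` route are hypothetical.

```python
import io
import json

# Hypothetical linear model standing in for a trained estimator.
WEIGHTS = {"x1": 0.5, "x2": -1.0}
BIAS = 0.1


def predict(features):
    return BIAS + sum(w * float(features[name]) for name, w in WEIGHTS.items())


def app(environ, start_response):
    """WSGI app exposing POST /predict with a JSON body like {"x1": 2, "x2": 1}."""
    if environ.get("PATH_INFO") != "/predict":
        status, body = "404 Not Found", {"error": "unknown route"}
    elif environ.get("REQUEST_METHOD") != "POST":
        status, body = "405 Method Not Allowed", {"error": "use POST"}
    else:
        try:
            length = int(environ.get("CONTENT_LENGTH") or 0)
            payload = json.loads(environ["wsgi.input"].read(length))
            status, body = "200 OK", {"prediction": predict(payload)}
        except (ValueError, KeyError, TypeError) as exc:
            # Bad JSON, missing features, or non-numeric values are client errors.
            status, body = "400 Bad Request", {"error": repr(exc)}
    data = json.dumps(body).encode("utf-8")
    start_response(status, [("Content-Type", "application/json"),
                            ("Content-Length", str(len(data)))])
    return [data]


def call(method, path, payload=None):
    """Invoke the app in-process, the way a WSGI server would (for testing)."""
    raw = json.dumps(payload).encode("utf-8") if payload is not None else b""
    environ = {"REQUEST_METHOD": method, "PATH_INFO": path,
               "CONTENT_LENGTH": str(len(raw)), "wsgi.input": io.BytesIO(raw)}
    captured = {}

    def start_response(status, headers):
        captured["status"] = status

    body = b"".join(app(environ, start_response))
    return captured["status"], json.loads(body)
```

For a local smoke test you can serve it with `wsgiref.simple_server.make_server("", 8000, app).serve_forever()`. In production it would run behind a WSGI server, or be rewritten as a FastAPI route (FastAPI is built on ASGI rather than WSGI).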

Continue Reading: Azure MLOps

Lab 8: Building and Deploying ML-Powered Web Applications

Objective: Create web applications with embedded ML models using Gradio/Flask and deploy them on Azure Cloud.

In this lab, you’ll learn how to integrate ML models into user-facing applications and deploy them using Docker on Azure. By the end of this lab, you will be confident in deploying ML-powered web applications.
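An ML-powered web app adds one concern on top of the API: turning untrusted form input into a prediction and rendering the result back as HTML safely. The stdlib sketch below shows that request/response cycle for a hypothetical threshold-based model. Gradio generates the form and output widgets for you (for example with `gr.Interface`), while in Flask you would write a handler much like `render_page`.

```python
import html
from urllib.parse import parse_qs

# Hypothetical model: flags a transaction as risky above a fixed threshold.
THRESHOLD = 1000.0


def predict(amount: float) -> str:
    return "risky" if amount > THRESHOLD else "normal"


def render_page(form_body: str) -> str:
    """Turn a submitted form (application/x-www-form-urlencoded) into an HTML page."""
    fields = parse_qs(form_body)
    raw = fields.get("amount", [""])[0]
    try:
        result = f"Prediction: {predict(float(raw))}"
    except ValueError:
        # Escape user input before echoing it back to avoid HTML/script injection.
        result = f"Invalid amount: {html.escape(raw)}"
    return (
        "<html><body>"
        '<form method="post"><input name="amount"><button>Predict</button></form>'
        f"<p>{result}</p>"
        "</body></html>"
    )
```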

Lab 9: Deploying Automated ML Services Using BentoML

Objective: Learn to deploy automated ML services using BentoML and Docker.

In this lab, you’ll develop and containerize ML services with BentoML and integrate them with MLFlow. By the end of this lab, you will understand how to build production-grade ML services.
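BentoML keeps saved models in a local store addressed by `name:version` tags, and `name:latest` resolves to the most recently saved version. The toy store below illustrates that tagging scheme; it is a hypothetical stand-in, not BentoML's implementation, and the timestamp-based default version is an assumption (BentoML generates its own version identifiers).

```python
from datetime import datetime, timezone


class ModelStore:
    """Toy store keyed by 'name:version' tags (hypothetical, BentoML-inspired)."""

    def __init__(self):
        self._models = {}  # name -> list of (version, model), oldest first

    def save(self, name, model, version=None):
        version = version or datetime.now(timezone.utc).strftime("%Y%m%d%H%M%S%f")
        self._models.setdefault(name, []).append((version, model))
        return f"{name}:{version}"

    def get(self, tag):
        """Resolve 'name:version', 'name:latest', or a bare 'name'."""
        name, _, version = tag.partition(":")
        versions = self._models[name]
        if version in ("", "latest"):
            return versions[-1][1]
        for v, model in versions:
            if v == version:
                return model
        raise KeyError(tag)
```

Pinning an explicit tag in a production service keeps deployments reproducible, while `:latest` is convenient during development.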

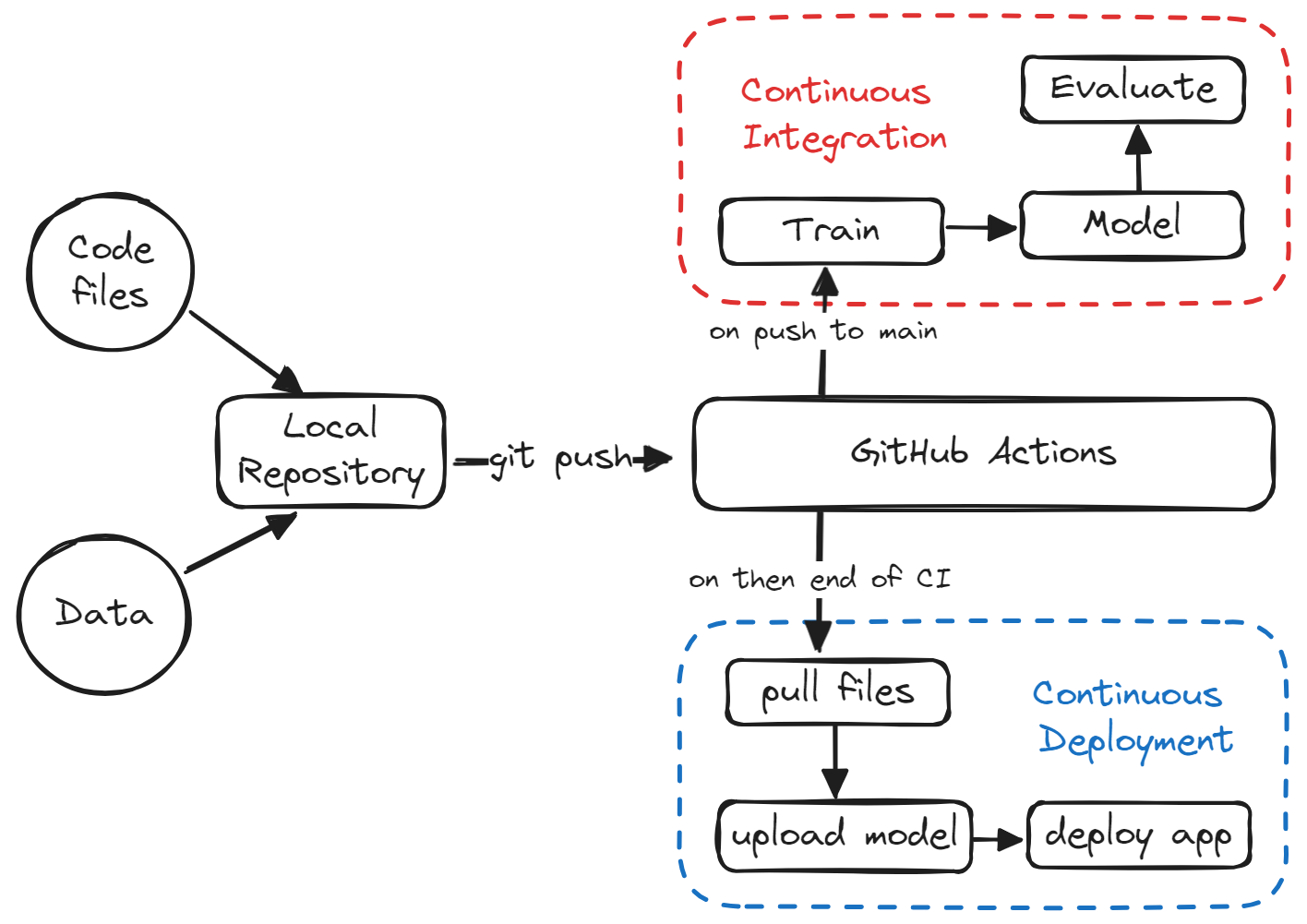

Lab 10: Implementing CI/CD Pipelines with GitHub Actions for MLOps

Objective: Understand how to use GitHub Actions and CML (Continuous Machine Learning) to implement CI/CD pipelines in MLOps.

In this lab, you’ll set up CI/CD workflows to automate model building, testing, and deployment. By the end of this lab, you will be able to apply continuous integration and deployment to MLOps pipelines.
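A typical CI/CD step for ML is a quality gate: after the workflow retrains the model, a script compares the new metrics with the current baseline and fails the job if the model got worse. The sketch below assumes both metric sets are available as JSON files (hypothetical names `metrics.json` and `baseline_metrics.json`) and writes a Markdown table that a tool like CML could post as a pull-request comment. The metric names and tolerances are assumptions.

```python
import json


def quality_gate(new, baseline, rules):
    """Compare candidate metrics with the baseline.

    rules maps metric name -> (higher_is_better, allowed_regression).
    Returns (passed, markdown_report)."""
    rows = ["| metric | baseline | candidate | ok |", "|---|---|---|---|"]
    passed = True
    for name, (higher_is_better, slack) in rules.items():
        base, cand = baseline[name], new[name]
        ok = cand >= base - slack if higher_is_better else cand <= base + slack
        passed = passed and ok
        rows.append(f"| {name} | {base:.4f} | {cand:.4f} | {'yes' if ok else 'NO'} |")
    return passed, "\n".join(rows)


def main(metrics_path="metrics.json", baseline_path="baseline_metrics.json",
         report_path="report.md"):
    """Entry point for a workflow step; returns the process exit code."""
    with open(metrics_path) as f:
        new = json.load(f)
    with open(baseline_path) as f:
        baseline = json.load(f)
    # Hypothetical rules: accuracy must not drop; latency may grow by up to 5 ms.
    passed, report = quality_gate(new, baseline,
                                  {"accuracy": (True, 0.0), "latency_ms": (False, 5.0)})
    with open(report_path, "w") as f:
        f.write(report + "\n")
    return 0 if passed else 1  # a non-zero exit code fails the workflow step
```

In a GitHub Actions job, a step running a script that ends with `sys.exit(main())` turns a metric regression into a failed check on the pull request.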

Lab 11: Tracking Model Performance and Data Drift Using Evidently AI

Objective: Use Evidently AI to monitor model performance and detect data drift in production systems.

In this lab, you’ll configure monitoring dashboards and evaluate model quality in real time. By the end of this lab, you will have the skills to track and maintain production ML systems effectively.
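Drift detection compares the distribution of a feature in recent production data with a reference window, typically the training data. The sketch below implements the Population Stability Index (PSI), one of the statistical tests Evidently can apply per column; the equal-width binning and the `eps` floor for empty bins are assumptions, and libraries differ in these details.

```python
import math


def psi(reference, current, bins=10, eps=1e-6):
    """Population Stability Index of `current` against `reference` (numeric data).

    Bin edges are equal-width over the reference range; values outside that range
    are clipped into the first or last bin. eps avoids log(0) for empty bins."""
    lo, hi = min(reference), max(reference)
    width = (hi - lo) / bins or 1.0  # guard against a constant reference column

    def shares(values):
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            counts[min(max(idx, 0), bins - 1)] += 1
        return [max(c / len(values), eps) for c in counts]

    ref, cur = shares(reference), shares(current)
    # Sum over bins of (actual share - expected share) * ln(actual / expected)
    return sum((c - r) * math.log(c / r) for r, c in zip(ref, cur))
```

A common rule of thumb reads PSI below 0.1 as little change and above 0.25 as significant drift, but thresholds should be tuned per feature and per use case.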

Lab 12: Ensuring Data and Model Integrity with Deepchecks

Objective: Implement Deepchecks for comprehensive validation of data and model integrity.

In this lab, you’ll use Deepchecks’ suites to validate datasets and assess model performance. By the end of this lab, you will confidently ensure the integrity of ML systems.
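Deepchecks runs suites of checks (for example its data-integrity and train-test-validation suites) over tabular datasets. To show what a few of those checks compute, the toy function below inspects lists of dict rows for missing values, mixed types, duplicate rows, and test rows that also appear in the training data. The function name and report keys are hypothetical, and the real suites cover many more checks.

```python
from collections import Counter


def _row_key(row):
    # Order-independent, hashable form of a row (assumes hashable cell values).
    return tuple(sorted(row.items()))


def integrity_report(train_rows, test_rows):
    """Toy versions of a few integrity checks on lists of dict rows."""
    columns = sorted({col for row in train_rows for col in row})
    missing = {c: sum(1 for r in train_rows if r.get(c) is None) for c in columns}
    # A column holding e.g. both int and str values usually signals a parsing bug.
    mixed_types = [
        c for c in columns
        if len({type(r[c]).__name__ for r in train_rows if r.get(c) is not None}) > 1
    ]
    train_counts = Counter(_row_key(r) for r in train_rows)
    duplicates = sum(n - 1 for n in train_counts.values() if n > 1)
    # Test rows identical to training rows inflate evaluation scores (leakage).
    leaked = sum(1 for r in test_rows if _row_key(r) in train_counts)
    return {"missing": missing, "mixed_types": mixed_types,
            "duplicate_rows": duplicates, "test_rows_seen_in_train": leaked}
```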

Lab 13: Building an End-to-End MLOps Pipeline on AWS

Objective: Learn to design, build, and deploy a complete MLOps pipeline using AWS services, ensuring efficient model lifecycle management, scalability, and monitoring.

In this lab, you will gain hands-on experience with AWS services to implement an end-to-end machine learning operations (MLOps) pipeline.
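Whatever AWS services you wire together, an end-to-end pipeline tends to have the same shape: process data, train, evaluate, and promote the model only if it passes a quality bar. The toy runner below sketches that flow in plain Python; the steps, the mean-predictor "model", and the `max_mae` threshold are hypothetical. In SageMaker Pipelines, these stages would roughly map to processing, training, and condition steps, with approved models registered in the SageMaker Model Registry.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class Pipeline:
    """Toy step runner: each step reads the shared context and returns new keys."""
    steps: list = field(default_factory=list)

    def step(self, fn: Callable[[dict], dict]):
        self.steps.append(fn)
        return fn

    def run(self, context=None):
        context = dict(context or {})
        context["log"] = []
        for fn in self.steps:
            context.update(fn(context))
            context["log"].append(fn.__name__)
        return context


pipeline = Pipeline()


@pipeline.step
def preprocess(ctx):
    clean = [y for y in ctx["raw"] if y is not None]  # drop missing records
    return {"train": clean[::2], "test": clean[1::2]}  # simple interleaved split


@pipeline.step
def train(ctx):
    return {"model": sum(ctx["train"]) / len(ctx["train"])}  # placeholder: predict the mean


@pipeline.step
def evaluate(ctx):
    errors = [abs(y - ctx["model"]) for y in ctx["test"]]
    return {"mae": sum(errors) / len(errors)}  # scored on held-out data only


@pipeline.step
def register_if_good(ctx):
    # Condition gate: only models that meet the bar move on to registry/deployment.
    return {"registered": ctx["mae"] <= ctx["max_mae"]}
```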


2. Real-World Projects

Project 1: End-to-End Machine Learning Model Development and Deployment

Scenario:

You are working as a Machine Learning Engineer for ABC Analytics, a company providing predictive analytics solutions for various industries. The team is building a complete pipeline to develop, deploy, and monitor machine learning models efficiently. Your goal is to create an end-to-end solution covering everything from model development to deployment with CI/CD integration.

Description:

In this project, you will:

- Develop an ML Model from Scratch:
  - Design and implement a machine learning model for a specific task (e.g., predictive analysis, classification, or regression).
  - Experiment with feature engineering techniques to optimize model performance.

- Validate Code and Preprocess Data:
  - Clean and preprocess the dataset to ensure it is ready for training.
  - Validate your code for efficiency, readability, and accuracy.

- Versioning with MLFlow and DVC:
  - Utilize MLFlow to track experiments, log metrics, and version models.
  - Implement DVC to version datasets and models, ensuring traceability in the pipeline.

- Share Repository with DagsHub and MLFlow:
  - Set up a shared repository for collaborative model management using DagsHub.
  - Integrate MLFlow to log and visualize training runs and model versions.

- Build an API with BentoML:
  - Package the trained ML model into a RESTful API using BentoML.
  - Enable easy integration of the model into various applications.

- Create a Streamlit App:
  - Develop an interactive Streamlit application to allow users to interact with the model, visualize predictions, and provide input data.

- Implement CI/CD with GitHub Actions:
  - Set up a CI/CD pipeline using GitHub Actions to automate tasks such as code testing, model retraining, API deployment, and app updates.

3. FAQs

1. What is MLOps, and why is it important?

MLOps (Machine Learning Operations) is the practice of streamlining the development, deployment, and maintenance of machine learning models in production. It integrates DevOps principles with machine learning workflows to ensure scalability, reliability, and efficiency.

2. How do the hands-on labs help me prepare for real-world MLOps challenges?

Each lab focuses on solving practical challenges encountered in the MLOps lifecycle. For instance:

- Model versioning with MLFlow and DVC ensures reproducibility.
- CI/CD pipeline implementation with GitHub Actions automates workflows.
- Monitoring with SHAP and Evidently prepares you for post-deployment performance tracking.

By the end of the labs, you’ll have the confidence to handle real-world MLOps scenarios.

3. Do I need prior experience in coding or programming?

Yes, basic to intermediate programming skills in Python are required. You should be comfortable with:

- Writing Python scripts
- Working with ML libraries (scikit-learn, TensorFlow, PyTorch)
- Using Jupyter Notebooks or IDEs like VS Code

However, the course will guide you through MLOps-specific coding practices, and you will gain hands-on experience in versioning, automation, and deployment.

4. What are the prerequisites to start with these labs and projects?

While these labs are beginner-friendly, some basic knowledge of the following is helpful:

- Programming in Python
- Fundamentals of machine learning (model training, evaluation)
- Familiarity with version control systems like Git
- Basic understanding of cloud platforms like AWS or Azure

5. Can I perform these labs if I’m new to MLOps?

Yes! The labs are structured to guide you from basic to advanced concepts. Beginner-friendly labs, such as data versioning with DVC and model tracking with MLFlow, lay a strong foundation before diving into more complex workflows like CI/CD pipelines and end-to-end pipelines on AWS.

6. How long does it take to complete the labs and projects?

On average:

- Each lab may take 1–2 hours, depending on your familiarity with the tools.
- The end-to-end real-world project may require 3–4 hours for implementation and fine-tuning.

You can pace yourself based on your schedule.

7. Who is this course for?

This course is designed for data scientists, ML engineers, DevOps professionals, software engineers, and anyone interested in learning how to operationalize machine learning models efficiently.

Related References

- Join Our Generative AI WhatsApp Community

- Introduction to Generative AI and Its Mechanisms

- Mastering Generative Adversarial Networks (GANs)

- Generative AI (GenAI) vs Traditional AI vs Machine Learning (ML) vs Deep Learning (DL)

- Azure MLOps: Machine Learning Operations Overview

Next Task For You

Don’t miss our EXCLUSIVE Free Training on MLOps! 🚀 This session is ideal for aspiring Machine Learning Engineers, Data Scientists, and DevOps Professionals looking to master the art of operationalizing machine learning workflows. Dive into the world of MLOps with hands-on insights on CI/CD pipelines, ML model versioning, containerization, and monitoring.

Click the image below to secure your spot!

![Microsoft Agentic AI Business Solutions Architect [AB-100] | K21 Academy](https://test.k21academy.com/wp-content/uploads/2025/11/Microsoft-Agentic-AI-Business-Solutions-Architect-AB-100-Exam-Overview1.png)