
Reinforcement Learning is an influential branch of Machine Learning. It is used to solve interactive problems where the information observed up to time t is used to decide which action to take at time t + 1.

In this blog, I’m going to cover:

- What Is Reinforcement Learning (RL)?

- What is Policy Search?

- What is Learning?

- How Is RL Similar to Traditional Controls?

- RL Workflow Overview

- What is the Environment?

- Model-Free RL vs. Model-Based RL

What is Reinforcement Learning (RL)?

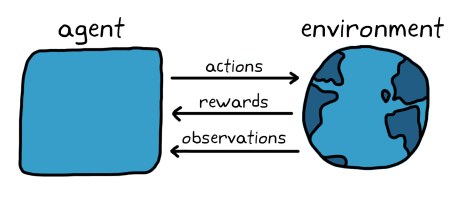

- In reinforcement learning, an agent (our artificial intelligence) takes actions within a real or virtual environment, relying on feedback from rewards to learn the most suitable way to achieve its goal.

- A machine or robot using reinforcement learning tries different ways of solving the same problem.

- It is also used to train machines to perform tasks such as walking.

- Desired outcomes earn the AI a reward; undesired outcomes earn a punishment. Machines learn through trial and error.

- The agent keeps repeating this loop: taking actions, changing the state, and receiving rewards.

- By repeating that process, it explores the environment and learns which actions lead to good rewards and favourable states, and which actions lead to poor rewards and unfavourable states.

- The simplest way to think of reinforcement learning is like training a dog. When you train a dog, you give it certain commands. If it obeys, you give it a treat, such as a biscuit; if it doesn't obey, you tell it that it's a bad dog or simply withhold the treat.
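The act, change state, get reward loop described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions: `CorridorEnv`, `run_episode`, and both agents are hypothetical names invented for this example, not part of any library. The corridor gives a reward of +1 at the goal and a small penalty of -0.1 per step, so a shorter route earns more. Neither agent learns anything yet; the point is only to show the interaction loop.

```python
import random


class CorridorEnv:
    """Hypothetical toy environment: a 1-D corridor with cells 0..length-1.
    The agent starts in cell 0; the episode ends when it reaches the last cell."""

    def __init__(self, length=5):
        self.length = length
        self.state = 0

    def reset(self):
        self.state = 0
        return self.state

    def step(self, action):
        # action is -1 (move left) or +1 (move right); the walls clip the position
        self.state = min(max(self.state + action, 0), self.length - 1)
        done = self.state == self.length - 1
        reward = 1.0 if done else -0.1  # reward at the goal, small penalty per step
        return self.state, reward, done


def run_episode(env, choose_action, max_steps=100):
    """One pass through the act -> change state -> get reward loop."""
    state = env.reset()
    total_reward = 0.0
    for _ in range(max_steps):
        action = choose_action(state)           # agent takes an action
        state, reward, done = env.step(action)  # environment changes state, returns reward
        total_reward += reward                  # agent collects the feedback
        if done:
            break
    return total_reward


def random_agent(state):
    return random.choice([-1, 1])


def always_right_agent(state):
    return 1


random.seed(0)
print(run_episode(CorridorEnv(), random_agent))
print(run_episode(CorridorEnv(), always_right_agent))
```

A learning agent would replace `random_agent` with a policy that it improves from the rewards it collects.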

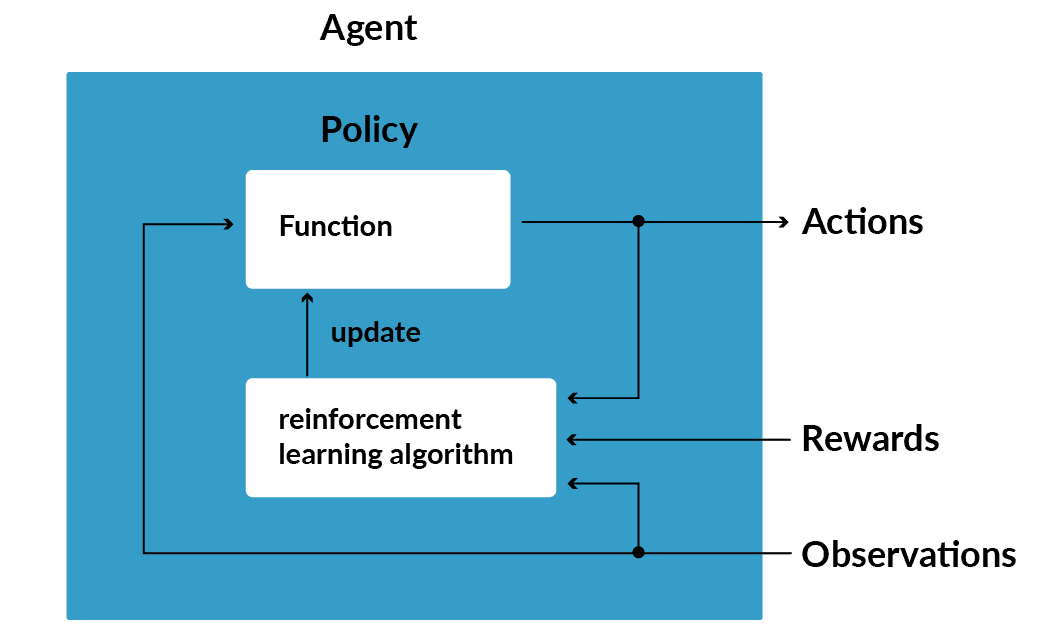

- Reinforcement Learning using a neural network policy.

What is the difference between reinforcement learning and supervised learning?

Reinforcement Learning (RL) involves training an agent to make decisions through trial and error, receiving feedback in the form of rewards or penalties. In contrast, Supervised Learning uses labeled data to train models to predict outcomes based on input-output pairs.

What are the types of reinforcement in reinforcement learning?

In reinforcement learning, there are three main types of reinforcement: **positive reinforcement**, where rewards are given for desired actions; **negative reinforcement**, where a desired behavior is strengthened by removing an unpleasant stimulus; and **punishment**, which discourages unwanted behavior through penalties.

What is Policy Search?

- The algorithm a software agent uses to determine its actions is called its policy.

- The policy can be a neural network that takes observations as inputs and outputs the action to take.

- The ultimate aim of the agent is to use reinforcement learning algorithms to learn the most appropriate policy as it interacts with the environment.

- That way, in any given state, the agent will take the optimal action.
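To make "a neural network taking observations as inputs and outputting the action" concrete, here is a minimal pure-Python sketch of a tiny policy network. `TinyPolicyNetwork` is a hypothetical name; the layer sizes, tanh hidden layer, softmax output, and the omission of biases are assumptions chosen to keep the example short. Real projects would normally use a library such as PyTorch or TensorFlow.

```python
import math
import random


def softmax(logits):
    """Turn raw scores into probabilities (shifted by the max for numerical stability)."""
    largest = max(logits)
    exps = [math.exp(z - largest) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


class TinyPolicyNetwork:
    """Hypothetical one-hidden-layer policy: observation -> action probabilities.
    Biases are omitted to keep the sketch short."""

    def __init__(self, n_obs, n_hidden, n_actions, seed=0):
        rng = random.Random(seed)
        # These weights are the policy parameters that a learning algorithm would adjust.
        self.w_hidden = [[rng.gauss(0.0, 0.5) for _ in range(n_obs)] for _ in range(n_hidden)]
        self.w_out = [[rng.gauss(0.0, 0.5) for _ in range(n_hidden)] for _ in range(n_actions)]

    def action_probs(self, obs):
        hidden = [math.tanh(sum(w * x for w, x in zip(row, obs))) for row in self.w_hidden]
        logits = [sum(w * h for w, h in zip(row, hidden)) for row in self.w_out]
        return softmax(logits)

    def act(self, obs, rng=random):
        """Sample an action index according to the policy's probabilities."""
        probs = self.action_probs(obs)
        return rng.choices(range(len(probs)), weights=probs, k=1)[0]


policy = TinyPolicyNetwork(n_obs=4, n_hidden=8, n_actions=2)
observation = [0.01, -0.02, 0.03, 0.0]  # e.g. a 4-number sensor reading
print(policy.action_probs(observation))
print(policy.act(observation))
```

Sampling from the probabilities (rather than always picking the top action) is one common design choice, because it lets the agent keep exploring while it learns.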

What is Learning?

- In reinforcement learning, learning is the term for the process of gradually adjusting the policy's parameters (for example, the weights of a neural network policy) to converge on the optimal policy.

- This lets us focus on setting up an appropriate policy structure instead of manually tuning the function to get the right parameters.

- The computer learns the parameters on its own through a systematic process of trial and error.
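Here is a minimal sketch of adjusting parameters by trial and error, using random-search hill climbing, one of the simplest policy-search methods. It is chosen only for illustration; the article does not prescribe a particular algorithm. The toy task and the names `episode_return` and `hill_climb` are hypothetical: a one-parameter policy (`gain`) steers a point toward a target, and the search keeps a random tweak of `gain` only when it earns a better return.

```python
import random


def episode_return(gain, target=1.0, steps=10):
    """Hypothetical deterministic task: drive a point from 0 toward `target`.
    The return is the negative sum of the remaining distance after each step."""
    position, total = 0.0, 0.0
    for _ in range(steps):
        action = gain * (target - position)  # the policy has a single parameter: gain
        position += action
        total -= abs(target - position)      # penalty for distance still left
    return total


def hill_climb(iterations=200, step_size=0.1, seed=0):
    """Trial and error: perturb the parameter, keep the change only if it helps."""
    rng = random.Random(seed)
    gain = 0.0
    best = episode_return(gain)
    for _ in range(iterations):
        candidate = gain + rng.gauss(0.0, step_size)  # try a nearby parameter value
        score = episode_return(candidate)
        if score > best:                              # keep improvements only
            gain, best = candidate, score
    return gain, best


learned_gain, learned_score = hill_climb()
print(learned_gain, learned_score)
```

Nobody tells the search that a gain of 1.0 reaches the target in one step; it discovers a value close to that purely from the returns it observes.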


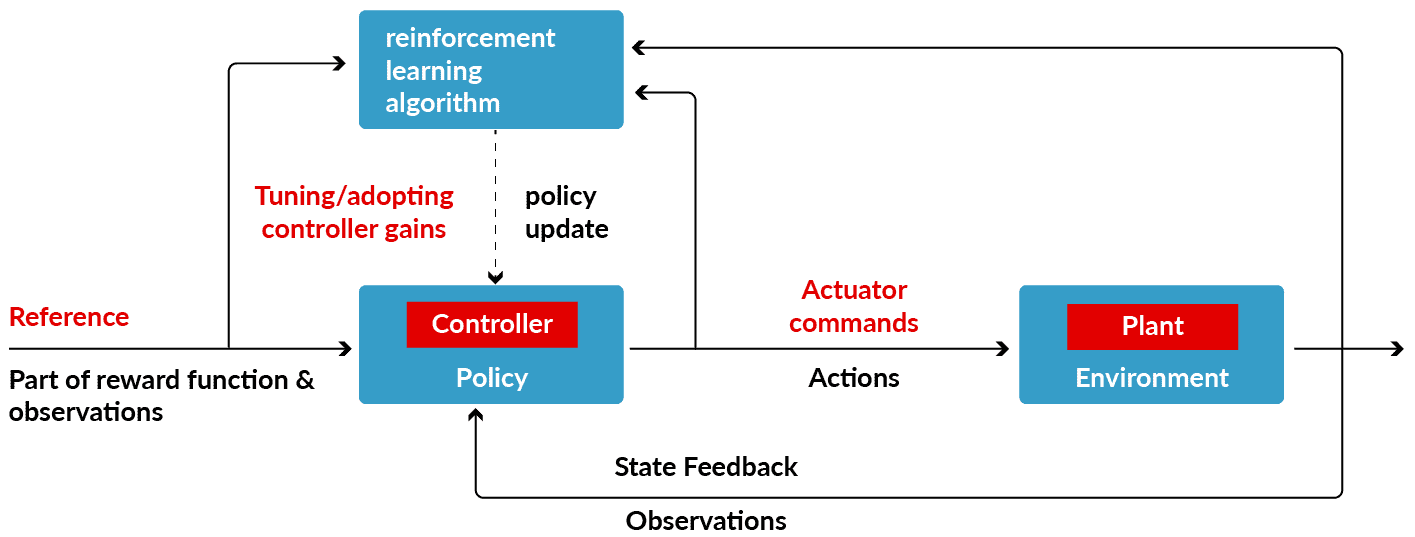

How Is RL Similar to Traditional Controls?

- The goal of reinforcement learning is similar to the control problem; it is just a different approach that uses different terms to represent similar concepts.

- With both approaches, we want to determine the correct inputs to a system that will produce the desired system behaviour.

- We are trying to figure out how to construct the policy (or the controller) that maps the observed state of the environment to the best actions.

- The feedback signal is the information from the environment, and the reference signal is built into both the environment observations and the reward function.
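The idea that the reference signal is built into the reward function can be sketched with a toy heating system. Everything here is an assumption for illustration: the room dynamics, the `simulate` function, and the setpoint values are hypothetical. A proportional controller plays the role of the policy (mapping the observed state to an action), and the reward is the negative squared gap between the setpoint (the reference) and the observed temperature.

```python
def simulate(gain, setpoint=21.0, start_temp=15.0, outside_temp=10.0, steps=50):
    """Hypothetical heating system: run a proportional controller and score it with a reward."""
    temp, total_reward = start_temp, 0.0
    for _ in range(steps):
        error = setpoint - temp                         # reference signal minus observation
        heater = max(0.0, gain * error)                 # policy / controller: maps state to action
        temp += 0.1 * (outside_temp - temp) + heater    # toy room dynamics (assumed)
        total_reward += -(setpoint - temp) ** 2         # reward built around the reference
    return total_reward


print(simulate(gain=0.0))  # heater never turns on
print(simulate(gain=0.5))
```

In control terms, `gain` is a tuned controller parameter; in RL terms, it is a policy parameter that an algorithm could learn by maximizing the total reward.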


RL Workflow Overview

1) In reinforcement learning, we need an environment where our agents can learn. We need to choose what should exist within the environment and whether it’s a simulation or a physical setup.

2) We need to think about what we ultimately want our agent to do and craft a reward function that will incentivize the agent to do just that.

3) In reinforcement learning, we need to choose a way to represent the policy. Consider how we want to structure the parameters and logic that make up the decision-making part of the agent.

4) We need to choose an algorithm to train the agent that works to find the optimal policy parameters.

5) Finally, we need to deploy the policy in the field and verify the results.
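The five workflow steps can be mapped onto a small, self-contained sketch. The problem (a three-armed slot machine, or "multi-armed bandit"), the payout rates, and all names (`SlotMachines`, `train`, `evaluate`) are hypothetical choices for illustration, and epsilon-greedy with running averages is just one simple training algorithm among many.

```python
import random


# Step 1: the environment (hypothetical): three slot machines with hidden payout rates.
class SlotMachines:
    def __init__(self, payout_probs, seed=0):
        self.payout_probs = payout_probs
        self.rng = random.Random(seed)

    def pull(self, arm):
        # Step 2: the reward function: 1 for a payout, 0 otherwise.
        return 1.0 if self.rng.random() < self.payout_probs[arm] else 0.0


# Step 3: the policy representation: one value estimate per arm, act greedily on it.
def greedy_arm(values):
    return max(range(len(values)), key=lambda a: values[a])


# Step 4: the training algorithm: epsilon-greedy exploration with running averages.
def train(env, n_arms, steps=2000, epsilon=0.1, seed=1):
    rng = random.Random(seed)
    values, counts = [0.0] * n_arms, [0] * n_arms
    for _ in range(steps):
        arm = rng.randrange(n_arms) if rng.random() < epsilon else greedy_arm(values)
        reward = env.pull(arm)
        counts[arm] += 1
        values[arm] += (reward - values[arm]) / counts[arm]  # incremental mean
    return values


# Step 5: deploy the learned (greedy) policy and verify the results.
def evaluate(env, values, pulls=1000):
    arm = greedy_arm(values)
    return sum(env.pull(arm) for _ in range(pulls)) / pulls


env = SlotMachines([0.2, 0.5, 0.8])
learned_values = train(env, n_arms=3)
print(greedy_arm(learned_values), evaluate(env, learned_values))
```

The `epsilon` parameter is where the exploration versus exploitation trade-off shows up: with probability `epsilon` the agent tries a random arm instead of the one it currently believes is best.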

What are the main points to consider in reinforcement learning?

Reinforcement learning (RL) can be computationally expensive, requiring vast amounts of data and time for training. It may struggle in environments with sparse rewards or complex state spaces. Additionally, RL models are sensitive to hyperparameter tuning and may lack interpretability.

What is the Environment?

- In reinforcement learning, the environment is everything that exists outside of the agent.

- It is where the agent sends actions, and it is what generates rewards and observations.

- The environment includes the system dynamics, so most of the system is actually part of the environment. The agent is just the piece of software that generates the actions and updates the policy through learning.

How does the CartPole environment in OpenAI Gym illustrate reinforcement learning?

The CartPole environment in OpenAI Gym demonstrates reinforcement learning by simulating a pole balanced on a moving cart. The agent learns to push the cart left or right to keep the pole upright, receiving a reward for every time step the pole stays balanced, which showcases the trial-and-error nature of RL.
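To show what happens inside such an environment, here is a simplified, dependency-free re-implementation of the classic cart-pole dynamics, with constants modeled on the formulation Gym uses for `CartPole-v1`. It is a sketch, not the Gym API: `cartpole_step`, `run_episode`, and the two policies are hypothetical names. The episode ends when the cart leaves the track or the pole tilts past 12 degrees, and every surviving step earns a reward of 1.

```python
import math
import random

# Constants modeled on the classic cart-pole formulation
GRAVITY, CART_MASS, POLE_MASS = 9.8, 1.0, 0.1
TOTAL_MASS = CART_MASS + POLE_MASS
HALF_POLE_LENGTH = 0.5
POLE_MASS_LENGTH = POLE_MASS * HALF_POLE_LENGTH
FORCE_MAG, TAU = 10.0, 0.02                       # push strength, seconds per step
X_LIMIT, THETA_LIMIT = 2.4, 12 * 2 * math.pi / 360


def cartpole_step(state, action):
    """Advance the physics one step. action 1 pushes right, 0 pushes left."""
    x, x_dot, theta, theta_dot = state
    force = FORCE_MAG if action == 1 else -FORCE_MAG
    cos_t, sin_t = math.cos(theta), math.sin(theta)
    temp = (force + POLE_MASS_LENGTH * theta_dot ** 2 * sin_t) / TOTAL_MASS
    theta_acc = (GRAVITY * sin_t - cos_t * temp) / (
        HALF_POLE_LENGTH * (4.0 / 3.0 - POLE_MASS * cos_t ** 2 / TOTAL_MASS))
    x_acc = temp - POLE_MASS_LENGTH * theta_acc * cos_t / TOTAL_MASS
    # Euler integration of position and angle
    x, x_dot = x + TAU * x_dot, x_dot + TAU * x_acc
    theta, theta_dot = theta + TAU * theta_dot, theta_dot + TAU * theta_acc
    failed = abs(x) > X_LIMIT or abs(theta) > THETA_LIMIT
    return (x, x_dot, theta, theta_dot), 1.0, failed  # +1 reward for this step


def run_episode(policy, seed=0, max_steps=500):
    rng = random.Random(seed)
    state = tuple(rng.uniform(-0.05, 0.05) for _ in range(4))  # small random start
    total = 0.0
    for _ in range(max_steps):
        state, reward, failed = cartpole_step(state, policy(state, rng))
        total += reward
        if failed:
            break
    return total


def random_policy(state, rng):
    return rng.choice([0, 1])


def lean_policy(state, rng):
    # Hand-written heuristic: push the cart toward the side the pole is falling to
    _, _, theta, theta_dot = state
    return 1 if theta + theta_dot > 0 else 0


print(run_episode(random_policy), run_episode(lean_policy))
```

An RL algorithm would aim to discover a policy like `lean_policy` (or a better one) on its own, using only the per-step rewards.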

Model-Free RL vs. Model-Based RL

- Model-based RL can reduce the time it takes to learn an optimal policy because we can use the model to guide the agent away from areas of the state space that we know have low rewards.

- Model-free reinforcement learning is the more general case. For example, the agent doesn't need to know the dynamics or kinematics of a walking robot. It can still learn how to collect the most reward without knowing how the joints move or the lengths of the limbs.

- With model-based RL, we don’t need to know the full environment model; we can provide the agent with just the parts of the environment we know.

- Model-free RL is popular right now because people hope to use it to solve problems where developing a model, even a simple one, is difficult.
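Here is a minimal sketch of the model-based side: when the agent has a model of the environment, it can plan instead of learning purely by trial and error. The corridor, the `model` function, and the discount factor of 0.9 are hypothetical; value iteration is used here as one classic planning method, not as the only model-based approach.

```python
GOAL = 4           # hypothetical corridor: cells 0..4, cell 4 is the terminal goal
ACTIONS = (-1, 1)  # move left, move right


def model(state, action):
    """Known model of the environment: next state and reward for a state-action pair."""
    next_state = min(max(state + action, 0), GOAL)
    reward = 1.0 if next_state == GOAL else 0.0
    return next_state, reward


def value_iteration(gamma=0.9, tol=1e-9):
    """Plan with the model: repeat Bellman optimality backups until values stop changing."""
    values = [0.0] * (GOAL + 1)  # the terminal goal keeps value 0
    while True:
        delta = 0.0
        for s in range(GOAL):
            best = max(r + gamma * values[s2] for s2, r in (model(s, a) for a in ACTIONS))
            delta = max(delta, abs(best - values[s]))
            values[s] = best
        if delta < tol:
            return values


def greedy_policy(values, gamma=0.9):
    """Read the policy off the values, using the model to look one step ahead."""
    def lookahead(s, a):
        s2, r = model(s, a)
        return r + gamma * values[s2]
    return {s: max(ACTIONS, key=lambda a: lookahead(s, a)) for s in range(GOAL)}


values = value_iteration()
print([round(v, 3) for v in values])  # [0.729, 0.81, 0.9, 1.0, 0.0]
print(greedy_policy(values))          # {0: 1, 1: 1, 2: 1, 3: 1}
```

The agent never takes a single real step here; every decision comes from querying the model, which is exactly what a model-free agent cannot do.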

What is the conclusion about the effectiveness of reinforcement learning in decision-making and optimization?

Reinforcement learning (RL) can improve decision-making and optimization by enabling systems to learn from interactions and adapt to changing environments. Because it maximizes long-term (cumulative) reward rather than immediate payoff, RL is well suited to complex sequential tasks in applications such as robotics and game playing.


Reinforcement Learning Textbook

Reinforcement Learning (RL) is a type of machine learning where an agent learns to make decisions by interacting with an environment. Through trial and error, the agent receives rewards or penalties, which guide its actions to maximize cumulative rewards. Key concepts include agents, states, actions, and rewards. RL is widely used in applications like robotics, gaming (e.g., AlphaGo), autonomous vehicles, and recommendation systems. With frameworks like OpenAI Gym and libraries such as TensorFlow and PyTorch, developers can implement RL algorithms to solve complex decision-making problems.

Reinforcement Learning in artificial intelligence

Reinforcement Learning (RL) in AI focuses on training agents to make sequences of decisions by interacting with an environment. Through trial and error, the agent learns to maximize rewards and minimize penalties. In RL, algorithms like Q-learning and Deep Q Networks (DQN) enable agents to improve their performance over time. This approach is widely used in areas such as robotics, gaming, self-driving cars, and recommendation systems. With Python libraries like TensorFlow and PyTorch, implementing RL models has become more accessible, driving innovation in AI applications.
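As a concrete illustration of Q-learning, here is a minimal tabular sketch on a hypothetical five-cell corridor (reward 1 for reaching the last cell, 0 otherwise). The environment, `env_step`, `q_learning`, and the hyperparameters (`alpha`, `gamma`, `epsilon`) are assumptions for illustration. Unlike a planning approach, the agent only samples transitions from the environment and updates a table of action values from what it observes.

```python
import random

GOAL = 4           # hypothetical corridor: cells 0..4, cell 4 is the terminal goal
ACTIONS = (-1, 1)  # move left, move right


def env_step(state, action):
    """The environment; the agent can only sample from it, not inspect it."""
    next_state = min(max(state + action, 0), GOAL)
    done = next_state == GOAL
    return next_state, (1.0 if done else 0.0), done


def q_learning(episodes=500, alpha=0.1, gamma=0.9, epsilon=0.1, max_steps=200, seed=0):
    rng = random.Random(seed)
    q = {(s, a): 0.0 for s in range(GOAL + 1) for a in ACTIONS}
    for _ in range(episodes):
        state = 0
        for _ in range(max_steps):
            # epsilon-greedy: explore with probability epsilon, otherwise exploit
            if rng.random() < epsilon:
                action = rng.choice(ACTIONS)
            else:
                best = max(q[(state, a)] for a in ACTIONS)
                action = rng.choice([a for a in ACTIONS if q[(state, a)] == best])  # break ties randomly
            next_state, reward, done = env_step(state, action)
            # Q-learning target: reward plus discounted best value of the next state
            target = reward if done else reward + gamma * max(q[(next_state, a)] for a in ACTIONS)
            q[(state, action)] += alpha * (target - q[(state, action)])
            state = next_state
            if done:
                break
    return q


q_table = q_learning()
print({s: max(ACTIONS, key=lambda a: q_table[(s, a)]) for s in range(GOAL)})
```

DQN follows the same update idea but replaces the table with a neural network, which is what makes it practical for large state spaces such as game screens.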

Sutton Reinforcement Learning

Reinforcement Learning (RL), as developed in the work of Richard Sutton, is a powerful machine learning paradigm where agents learn to make decisions by interacting with an environment. Through trial and error, agents receive feedback in the form of rewards or penalties, guiding them to optimize their actions. Sutton's work focuses on key RL concepts like value functions, policies, and exploration-exploitation trade-offs. RL has vast applications, including robotics, gaming, and autonomous vehicles, enabling systems to learn from experience and improve decision-making over time. It's a core component of AI for solving complex sequential decision-making problems.

Sutton and Barto Reinforcement Learning

Reinforcement Learning (RL), as described by Sutton and Barto, is a type of machine learning where an agent learns to make decisions by interacting with an environment. The agent receives feedback in the form of rewards or penalties based on its actions. Over time, the agent aims to maximize cumulative rewards by learning from its experiences. This concept is widely used in areas like robotics, gaming, and autonomous systems, enabling machines to adapt and improve their performance through trial and error. Sutton and Barto’s work is foundational for modern RL algorithms.
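"Maximizing cumulative rewards" is usually made precise with the discounted return, as in Sutton and Barto: G_t = r_{t+1} + γ r_{t+2} + γ² r_{t+3} + ..., where the discount factor γ (between 0 and 1) makes rewards further in the future count less. The helper below, `discounted_return`, is a hypothetical name for a minimal sketch that computes it by working backwards through an episode's rewards.

```python
def discounted_return(rewards, gamma=0.99):
    """Cumulative discounted reward G = r_1 + gamma*r_2 + gamma^2*r_3 + ..."""
    g = 0.0
    for r in reversed(rewards):  # work backwards: G_t = r_{t+1} + gamma * G_{t+1}
        g = r + gamma * g
    return g


print(discounted_return([1.0, 1.0, 1.0], gamma=0.5))  # 1 + 0.5 + 0.25 = 1.75
print(discounted_return([0.0, 0.0, 10.0], gamma=0.9))
```

Working backwards uses the recursive form of the return, so each reward is touched once instead of recomputing powers of γ.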
